The rapid advancement of artificial intelligence (AI) technology, particularly generative AI, presents immense opportunities for organisations across industries to innovate, increase efficiency, and improve products and services. However, the deployment of AI systems also introduces significant risks and governance challenges that must be carefully navigated. As organisations move from initial excitement and pilots to scaling AI initiatives, they must establish robust governance frameworks to manage ethical, legal, and technical risks while fostering responsible innovation.

Recent research and case studies provide valuable insights into the key components of effective AI governance. The experience of AstraZeneca in operationalising AI governance highlights common challenges, such as balancing innovation and risk, defining AI scope, harmonising standards across decentralised organisations, and measuring success. Best practices identified through this research include:

- integrating AI governance with existing structures,

- using pragmatic terminology focused on risk assessment, and

- investing in continuous education and change management to embed ethical AI practices within the organisational culture.

These best practices, while sourced from large multinationals, also apply to other organisations, in particular mid-sized and mission-based organisations. As noted by experts in the field, these organisations are increasingly exploring diverse AI applications, driven by resource constraints, but must prioritise ethical considerations and establish clear policies and oversight mechanisms. A proactive approach to developing AI governance frameworks is essential to maintain trust and mitigate risks.

For mission-based organisations, responsible AI governance is particularly crucial to ensure that AI systems align with their values and advance their social impact goals. Counterintuitively, these organisations tend to have a higher risk appetite, so using risk as the main driver for governance can be less effective than focusing on the value these initiatives deliver. A focus on value also helps the business case for innovation overcome the hurdles that arise in putting innovations into production.

A recent Deloitte survey found that while 67% of organisations reported increased AI investments due to strong early results, many struggle to scale experiments into production and measure the impact on business outcomes. To unlock the benefits of AI, organisations must prioritise use cases that align with their mission, invest in data management and quality, and establish KPIs to track performance and value creation.

Another critical aspect of AI governance is navigating the evolving regulatory landscape. As governments and international bodies develop AI standards and guidelines, organisations must ensure compliance and demonstrate responsible practices. The Australian government published a set of principles for ethical AI, and a national framework for government departments and agencies. The CSIRO also published an extremely comprehensive responsible AI pattern catalogue which helps define a common conceptual language that organisations can use to orient their efforts in governance.

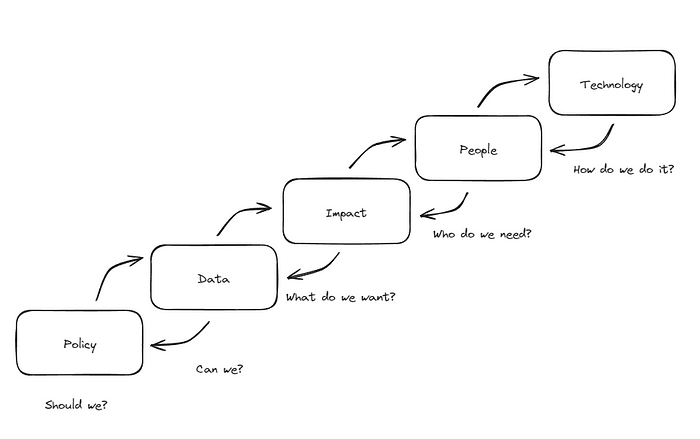

Drawing from these insights, a proposed framework for AI governance in mission-based organisations consists of five key components: Policy, Data, Impact, People, and Technology.

This framework should be approached sequentially, starting at the bottom with Policy and working upwards towards Technology. Each step should be coherent and consistent with all the others, with revisions made across steps as required to maintain an integrated logic.

The steps are:

- Policy — Does this initiative align with the organisation’s mission and purpose? If you ask stakeholders the question “should we do this”, will they respond positively? Do we have clarity on our organisational/corporate policies relating to this initiative and their coverage for what we plan to do? Do they need to change?

- Data — Is the data required to achieve this initiative technically available? Are you able to use it? Are there privacy or other information risks? This is important, as most problems in scaling to production arise from assumptions about the quality or quantity of data.

- Impact — Is this use case compelling enough? Will it change things enough to overcome resistance and disbelief? What will you get by doing this? What will change? What will stay the same?

- People — Do we have the right people to make this happen? Who is going to be changed by this initiative, and what do they need?

- Technology — Is this actually possible? Does the technology align with the organisation’s technical environment? What is the concept for how this might work?

This integrated approach moves beyond just looking at risks and finding reasons not to do something. By teasing out some of the implementation details early, and by maintaining focus on the key stumbling blocks (i.e. policy and data), this approach should ensure a more successful path forward.
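As an illustration only, the five steps above can be sketched as an ordered checklist that reports the first step still needing attention. The names `STEPS`, `Review`, and `first_blocker` are hypothetical and not part of the framework itself, and reducing each step to a simple pass/fail is a simplifying assumption; in practice, revisions may ripple back across earlier steps.

```python
from dataclasses import dataclass
from typing import Optional

# Order follows the article: start with Policy, work upwards to Technology.
STEPS = ["Policy", "Data", "Impact", "People", "Technology"]


@dataclass
class Review:
    """Outcome of reviewing one step (hypothetical structure)."""
    passed: bool
    notes: str = ""


def first_blocker(reviews: dict[str, Review]) -> Optional[str]:
    """Return the first step, in order, that is unreviewed or not passed.

    Returns None when every step has passed.
    """
    for step in STEPS:
        review = reviews.get(step)
        if review is None or not review.passed:
            return step
    return None


# Example: Policy is settled, but a data question is still open.
reviews = {
    "Policy": Review(True, "Aligned with mission; no policy change needed"),
    "Data": Review(False, "Privacy review outstanding"),
}
print(first_blocker(reviews))  # Data
```

Keeping the steps in a single ordered list makes the sequence explicit: an initiative with an open Policy or Data question is surfaced before any time is spent on the later steps.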

Some next steps for implementing this AI governance framework for mission-based organisations could be:

- Evaluate potential use cases: The opportunity for generative AI is not necessarily in the high value/high volume processes. If you are not looking at these already, then quit this article now and fix those first. However, there is always a long tail of complex and cumbersome processes, which is the ideal hunting ground for potential initiatives. Look here for things which require reasoning, translation (not necessarily between languages; it could be between domains or concepts), or processing of large unstructured data sets (think summaries).

- Assess your readiness to tackle this problem: Are you fighting fires and just trying to keep the lights on? If so, this isn’t the time for experimenting with AI. Make sure you have the time and space to make this happen.

- Engage leadership and gain buy-in: Make sure your stakeholders are ready to go.

- Establish a cross-functional team: Do not outsource this. You don’t need consultants right now. You need to build a network within the organisation that will bring these initiatives to light.

- Prioritise and execute: Create a simple roadmap and plan. Start with “conscious tinkering” and move towards more sophisticated approaches as you gain maturity. Get some help from consultants if you need it, but make sure to retain the learning obtained from these initiatives.

- Iterate: Use your cross-functional team to learn and adapt. Build a virtuous cycle.

- Communicate and engage: Regularly communicate progress, successes, and challenges with AI governance to all stakeholders. Engage employees, beneficiaries, and partners in ongoing dialogue and collaboration to ensure the framework remains relevant and effective.

By embracing a proactive and comprehensive approach to AI governance, mission-based organisations can confidently harness the transformative potential of AI while mitigating risks and ensuring alignment with their values.